Multi-timescale drowsiness characterization based on a video of a driver's face

Quentin Massoz, Jacques G. Verly, and Marc Van Droogenbroeck

Drowsiness is a major cause of fatal accidents, in particular in transportation. It is therefore crucial to develop automatic, real-time drowsiness characterization systems that issue accurate and timely warnings of drowsiness to the driver. In practice, the least intrusive physiology-based approach is to remotely monitor, via cameras, facial expressions indicative of drowsiness, such as slow and long eye closures. Since the system's decisions are based on facial expressions within a given time window, there is a trade-off between accuracy (best achieved with long windows, i.e., at long timescales) and responsiveness (best achieved with short windows, i.e., at short timescales). To deal with this trade-off, we develop a multi-timescale drowsiness characterization system composed of four binary drowsiness classifiers operating at four distinct timescales (5 s, 15 s, 30 s, and 60 s) and trained jointly. We introduce a multi-timescale ground truth of drowsiness, based on the reaction times (RTs) recorded during standard Psychomotor Vigilance Tasks (PVTs), that enables our system to characterize drowsiness with diverse trade-offs between accuracy and responsiveness. We evaluated our system on 29 subjects via leave-one-subject-out cross-validation and obtained global accuracies of 70%, 85%, 89%, and 94% for the four classifiers operating at increasing timescales, respectively.
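The exact rule that turns RTs into labels at each timescale is not restated here. Purely as an illustration, the Python sketch below assumes a hypothetical rule: a window is labeled drowsy if it contains at least one lapse, taken here as an RT of at least 500 ms (a common PVT lapse threshold). The rule, the threshold, and the function name `multi_timescale_labels` are assumptions, not the article's definition.

```python
from typing import List, Tuple

# The four timescales of the classifiers, in seconds (from the text).
TIMESCALES_S = (5, 15, 30, 60)

# Assumption: a "lapse" is an RT of at least 500 ms, a common PVT lapse
# threshold; the article's actual ground-truth rule may differ.
LAPSE_THRESHOLD_MS = 500.0


def multi_timescale_labels(
    events: List[Tuple[float, float]], t_end_s: float
) -> List[int]:
    """Return one binary label per timescale for the windows ending at t_end_s.

    events: (stimulus_time_s, reaction_time_ms) pairs from one PVT.
    A window [t_end_s - T, t_end_s) is labeled 1 ("drowsy") if it contains
    at least one lapse, else 0. This rule is a hypothetical stand-in.
    """
    labels = []
    for T in TIMESCALES_S:
        start = t_end_s - T
        has_lapse = any(
            start <= t < t_end_s and rt >= LAPSE_THRESHOLD_MS
            for t, rt in events
        )
        labels.append(int(has_lapse))
    return labels


# Example: one lapse (620 ms) occurring 20 s before the end of the window.
events = [(40.0, 620.0), (52.0, 310.0), (57.5, 280.0)]
print(multi_timescale_labels(events, t_end_s=60.0))  # → [0, 0, 1, 1]
```

Because the four windows share the same end point and are nested, this particular rule can only label a short window as drowsy if every longer window is labeled drowsy as well.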

Description of the drowsiness dataset

We collected data from 35 young, healthy subjects (21 females and 14 males) aged 23.3 ± 3.6 years (mean ± standard deviation), free of drug, alcohol, and sleep disorders. The subjects were acutely deprived of sleep for up to 30 hours over two consecutive days and were forbidden to consume any stimulants. During this period, the subjects performed three 10-min PVTs: PVT1 took place at 10–11 am (day 1), PVT2 at 3:30–4 am (day 2), and PVT3 at 12–12:30 pm (day 2). The PVTs were performed in a quiet, isolated laboratory environment without any temporal cues (e.g., a watch or smartphone). The room lights were turned off for PVT2 and PVT3.

We adopted the PVT implementation proposed by Basner and Dinges [1], where the subject is instructed to react as fast as possible (via a response button) to visual stimuli occurring on a computer screen at random intervals (ranging from 2 to 10 seconds). During each 10-min PVT, we recorded the RTs (in milliseconds), as well as the near-infrared face images (at 30 frames per second) via the Microsoft Kinect v2 sensor.
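Since the RTs are logged in milliseconds while the face video runs at 30 frames per second, each PVT event has to be mapped onto the video timeline. The Python sketch below simulates one PVT schedule and converts event times to frame indices. The uniform interval distribution, the convention that each interval starts at the previous response, and the helper names `simulate_pvt` and `to_frame` are assumptions for illustration; real onsets would come from the recorded PVT log.

```python
import math
import random
from typing import List, Tuple

FPS = 30                          # near-infrared frame rate (from the text)
PVT_DURATION_S = 600.0            # one 10-min PVT
ISI_MIN_S, ISI_MAX_S = 2.0, 10.0  # random inter-stimulus intervals (from the text)


def simulate_pvt(rts_ms: List[float], seed: int = 0) -> List[Tuple[float, float]]:
    """Return simulated (stimulus_onset_s, rt_ms) pairs for one PVT.

    Assumptions (hypothetical, not from the article): each interval is drawn
    uniformly in [2, 10] s and starts at the previous response; RTs are
    cycled from rts_ms instead of being measured.
    """
    rng = random.Random(seed)
    events: List[Tuple[float, float]] = []
    last_response_s = 0.0
    k = 0
    while True:
        onset_s = last_response_s + rng.uniform(ISI_MIN_S, ISI_MAX_S)
        rt_ms = rts_ms[k % len(rts_ms)]
        response_s = onset_s + rt_ms / 1000.0
        if response_s > PVT_DURATION_S:  # stop once the test would be over
            break
        events.append((onset_s, rt_ms))
        last_response_s = response_s
        k += 1
    return events


def to_frame(t_s: float) -> int:
    """Index of the video frame shown at time t_s, with frame 0 at t = 0."""
    return math.floor(t_s * FPS)


events = simulate_pvt([250.0, 300.0, 650.0], seed=42)
for onset_s, rt_ms in events[:3]:
    response_frame = to_frame(onset_s + rt_ms / 1000.0)
    print(f"stimulus at frame {to_frame(onset_s)}, response at frame {response_frame}")
```

Under these assumptions, a stimulus-response cycle lasts about 6.4 s on average (a 6-s mean interval plus a 0.4-s mean RT), so a 10-min PVT yields roughly 90 to 100 stimuli; at 30 fps, a 300-ms RT spans 9 frames.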

We make the full dataset (88 PVTs; the remaining ones were lost due to technical issues) available alongside the present article. For privacy reasons, however, the dataset only provides (1) the RTs and (2) the intermediate representations of our system (i.e., the sequences of eyelids distances), not the near-infrared face images.

Our multi-timescale drowsiness characterization system

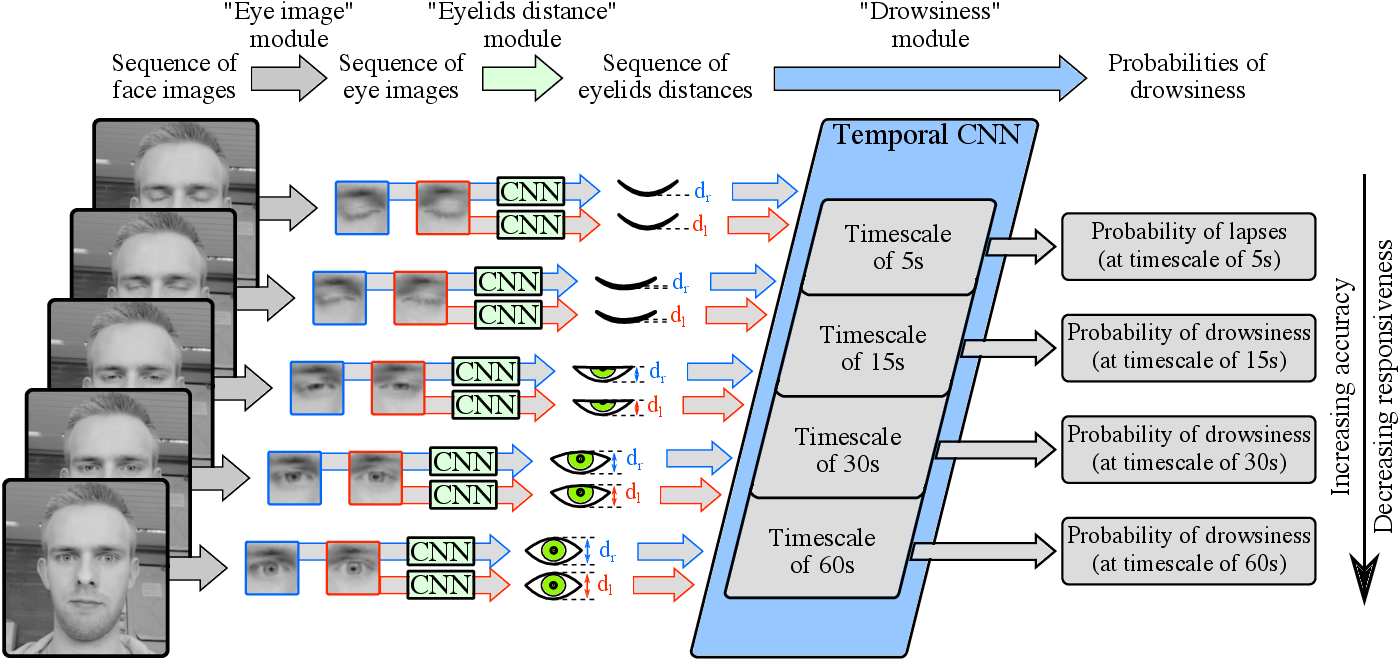

Our multi-timescale drowsiness characterization system consists of a succession of three processing modules and operates on any given 1-min sequence of face images. First, from each face image, the “eye image” module produces two eye images (left and right) via off-the-shelf algorithms. Second, from each eye image, the “eyelids distance” module produces the eyelids distance via a convolutional neural network (CNN). Third, from the 1-min sequence of eyelids distances and via a temporal CNN, the “drowsiness” module (1) extracts features related to the eye closure dynamics at four timescales, i.e., the four most recent time windows of increasing lengths (5 s, 15 s, 30 s, and 60 s), and (2) produces four probabilities of drowsiness with diverse trade-offs between accuracy and responsiveness.
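Assuming one eyelids distance per frame at 30 frames per second, a 1-min sequence holds 1800 values, and the four most recent windows span its last 150, 450, 900, and 1800 frames. The Python sketch below extracts those windows and, as a hand-crafted stand-in for the features that the temporal CNN learns, computes a PERCLOS-like fraction of frames in which the eyelids distance falls below a closure threshold. The threshold, the helper names, and the feature itself are hypothetical and not part of the described system.

```python
from typing import Dict, Sequence

FPS = 30                        # frame rate of the face video (from the text)
TIMESCALES_S = (5, 15, 30, 60)  # the four timescales (from the text)


def window_lengths_frames(fps: int = FPS) -> Dict[int, int]:
    """Number of frames in each of the four windows (e.g., 5 s -> 150 frames)."""
    return {T: T * fps for T in TIMESCALES_S}


def closure_fractions(
    eyelids_distances: Sequence[float], closed_below: float, fps: int = FPS
) -> Dict[int, float]:
    """Fraction of 'closed-eye' frames in each of the four most recent windows.

    eyelids_distances: one value per frame, oldest first, covering at least 1 min.
    closed_below: hypothetical threshold under which the eye counts as closed.
    This PERCLOS-like feature is a hand-crafted stand-in, not the temporal CNN.
    """
    n_needed = max(TIMESCALES_S) * fps
    if len(eyelids_distances) < n_needed:
        raise ValueError(f"need at least {n_needed} frames, got {len(eyelids_distances)}")
    features = {}
    for T, n in window_lengths_frames(fps).items():
        window = eyelids_distances[-n:]  # the n most recent frames
        features[T] = sum(d < closed_below for d in window) / n
    return features


# Toy sequence: eyes open for 55 s, then closed for the last 5 s.
seq = [8.0] * (55 * FPS) + [1.0] * (5 * FPS)
print(window_lengths_frames())  # → {5: 150, 15: 450, 30: 900, 60: 1800}
print(closure_fractions(seq, closed_below=3.0))
```

The toy example mirrors the accuracy/responsiveness trade-off: a 5-s closure immediately saturates the 5-s feature (1.0) but only moves the 60-s feature to 1/12, since the longest window averages over much more evidence.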

Links

- The article: Sensors, ORBi.

- Data, code, trained models of our system: GitHub.

- Data, code of the proxy system: GitHub.

Credits

If you use our code, we would appreciate it if you cited [2]:

@article{Massoz2018MultiTimescale,
  title = {Multi-Timescale Drowsiness Characterization Based on a Video of a Driver's Face},
  author = {Q. Massoz and J. G. Verly and M. {Van Droogenbroeck}},
  journal = {Sensors},
  publisher = {MDPI},
  volume = {18},
  number = {9},
  pages = {1-17},
  year = {2018},
  month = {August},
  doi = {10.3390/s18092801},
  url = {http://www.mdpi.com/1424-8220/18/9/2801},
  keywords = {drowsiness; driver monitoring; multi-timescale; eye closure dynamics; psychomotor vigilance task; reaction time; convolutional neural network}
}

References

[1] M. Basner and D. F. Dinges. Maximizing Sensitivity of the Psychomotor Vigilance Test (PVT) to Sleep Loss. Sleep, 34(5):581-591, 2011.

[2] Q. Massoz, J. G. Verly, and M. Van Droogenbroeck. Multi-Timescale Drowsiness Characterization Based on a Video of a Driver's Face. Sensors, 18(9):1-17, 2018. URL http://www.telecom.ulg.ac.be/mts-drowsiness.