Summarizing the performances of a background subtraction algorithm measured on several videos

A. PiΓ©rard and M. Van Droogenbroeck

University of Liège

Full paper in PDF

How to cite this reference: A. PiΓ©rard and M. Van Droogenbroeck.

Summarizing the performances of a background subtraction algorithm measured on several videos. In

IEEE International Conference on Image Processing (ICIP), Abu Dhabi, United Arab Emirates, October 2020. [

BibTeX entry]

Summary

There exist many background subtraction algorithms to detect motion in videos. To help comparing them, datasets with ground-truth data such as CDNET or LASIESTA have been proposed. These datasets organize videos in categories that represent typical challenges for background subtraction. The evaluation procedure promoted by their authors consists in measuring performance indicators for each video separately and to average them hierarchically, within a category first, then between categories, a procedure which we name “summarization”. While the summarization by averaging performance indicators is a valuable effort to standardize the evaluation procedure, it has no theoretical justification and it breaks the intrinsic relationships between summarized indicators. This leads to interpretation inconsistencies. In this paper, we present a theoretical approach to summarize the performances for multiple videos that preserves the relationships between performance indicators. In addition, we give formulas and an algorithm to calculate summarized performances. Finally, we showcase our observations on CDNET 2014.

Keywords: performance summarization, background subtraction, multiple evaluations, CDNET, classification performance

1βIntroduction

A plethora of background subtraction algorithms have been proposed in the literatureΒ

[15, 17, 16, 14, 13, 1, 11]. They aim at predicting, for each pixel of each frame of a video, whether the pixel belongs to the background, free of moving objects, or to the foreground. Background subtraction algorithms have to operate in various conditions (viewpoint, shadows, lighting conditions, camera jitter,

etc). These conditions are covered by different videos in evaluation datasets, such as CDNETΒ

[10, 9] or LASIESTAΒ

[2].

Measuring the performance on a single video is done at the pixel level, each pixel being associated with a ground-truth class y (either negative cβββ for the background, or positive cβ+β for the foreground) and an estimated class yΜ (the temporal and spatial dependences between pixels are ignored). Performance indicators adopted in the field of background subtraction are mainly those used for two-class crisp classifiers (precision, recall, sensitivity, F-score, error rate, etc).

To compare the behavior of algorithms, it is helpful to “

summarize” all the performance indicators that are originally measured on the individual videos. However, to the best of our knowledge, there is no theory for this summarization process, although an attempt to standardize it has been promoted with CDNET. In CDNET, videos are grouped into 11 categories, all videos in a given category having the same importance, and all categories having also the same importance. In other words, the videos are weighted regardless of their size. The standardized summarization process of CDNET is performed hierarchically by computing an arithmetic mean, first between the videos in a category, then between the categories. This averaging is done for seven performance indicators, one by one, independently. While the procedure of CDNET is valuable, it has two major drawbacks related to the interpretability of the summarized values.

First, the procedure breaks the intrinsic relationships between performance indicators. The case of the M4CD algorithm, as evaluated on CDNET, topically illustrates this inconsistency. Despite that the F-score is known to be the harmonic mean of precision and recall, the arithmetic mean of the F-scores across all videos is

0.69 while the harmonic mean between the arithmetically averaged precisions and recalls is

0.75. The difficulty to summarize F-scores has already been discussed inΒ

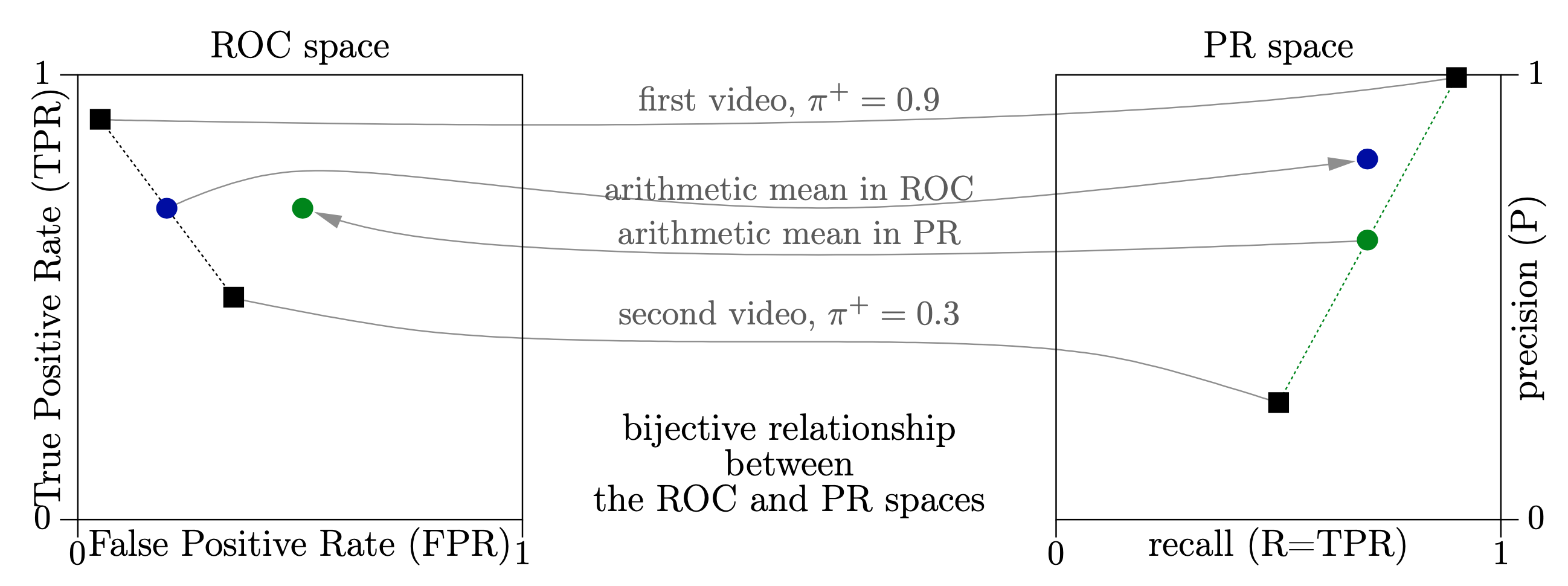

[4], when averaging between folds in cross-validation. Such a problem also occurs for other indicators. Moreover, FigureΒ

1β shows that the summarization with the arithmetic mean breaks the bijective relationship between the ROC and

PR spacesΒ

[6]. It is not equivalent to summarize the performances in one space or the other. In particular, one could obtain a meaningless arithmetically averaged performance point, located in the unachievable part of the PR spaceΒ

[7] (the achievable part is not convex). This leads to difficulties for the interpretation and, eventually, to contradictions between published summarized results.

Second, some indicators (such as the precision, recall, sensitivity, or error rate) have a probabilistic meaningΒ

[3]. Arithmetically averaging these indicators does not lead to a value that preserves the probabilistic meaning. This leads to interpretability issues. Strictly speaking, one cannot think in terms of probabilities unless a random experiment is defined very precisely, as shown by Bertrandβs paradoxΒ

[5].

This paper presents a better summarization procedure that avoids these interpretability issues. In SectionΒ

2β, we present indicators to measure the performance of an algorithm applied on a single video and pose the random experiment that underpins the probabilistic meaning of these indicators. Then, in SectionΒ

3β, we generalize this random experiment for several videos, and show that the resulting performance indicators can be computed based only on the indicators obtained separately for each video. In other words, we have established a theoretical model for summarization that guarantees a consistency between the indicators resulting from both random experiments. In SectionΒ

4β, we showcase our new summarization procedure on CDNET and discuss how it affects the ranking between algorithms. SectionΒ

5β concludes the paper.

2βPerformance indicators for one video

The performance of a background subtraction algorithm on a video is measured by running it once (even for non-deterministic algorithms), and counting at the pixel level the amounts of true negatives TN (yβ=βyΜβ=βcβββ), false positives FP (yβ=βcβββ and yΜβ=βcβ+β), false negatives FN (yβ=βcβ+β and yΜβ=βcβββ), and true positives TP (yβ=βyΜβ=βcβ+β).

The performance indicators are then derived from these amounts. For example, the prior of the positive class is Οβ+ββ=β(FNβ+βTP)/(TNβ+βFPβ+βFNβ+βTP), the rate of positive predictions is Οβ+ββ=β(FPβ+βTP)/(TNβ+βFPβ+βFNβ+βTP), the error rate is ERβ=β(FPβ+βFN)/(TNβ+βFPβ+βFNβ+βTP), the true negative rate (specificity) is TNRβ=β(TN)/(TNβ+βFP), the false positive rate is FPRβ=β(FP)/(TNβ+βFP), the false negative rate is FNRβ=β(FN)/(FNβ+βTP), the true positive rate (recall) is TPRβ=βRβ=β(TP)/(FNβ+βTP), the positive predictive value (precision) is PPVβ=βPβ=β(TP)/(FPβ+βTP), and the F-score is Fβ=β(2βTP)/(FPβ+βFNβ+β2βTP).

The

(FPR,β TPR) coordinates define the well-known Receiver Operating Characteristic (ROC) spaceΒ

[12, 18]. The precision

P and recall

R define the

PR evaluation spaceΒ

[7], which is an alternative to the ROC space, as there exists a bijection between these spaces, for a given prior

Οβ+βΒ

[6]. In fact, this bijection is just a particular case of the relationships that exist between the various indicators. There are other famous relationships. For example, the F-score is known to be the harmonic mean of the precision

P and recall

R.

Despite its importance for the interpretation, it is often overlooked that some indicators have a probabilistic meaningΒ

[3]. Let us consider the following random experiment.

Random Experiment 1 (for one video) Draw one pixel at random (all pixels being equally likely) from the video and observe the ground-truth class Y as well as the predicted class YΜ for this pixel. The result of the random experiment is the pair Ξβ=β(Y,βYΜ).

We use capital letters for

Y,

YΜ, and

Ξ to emphasize the random nature of these variables. The outcome of this random experiment can be associated with a true negative

tnβ=β(cβββ,βcβββ), a false positive

fpβ=β(cβββ,βcβ+β), a false negative

fnβ=β(cβ+β,βcβββ), or a true positive

tpβ=β(cβ+β,βcβ+β). The family of

probabilistic indicators can be defined based on this random experiment:

It includes

Οβ+ββ=βP(Ξβββ{fn,βtp}),

Οβ+ββ=βP(Ξβββ{fp,βtp}),

ERβ=βP(Ξβββ{fp,βfn}),

TNRβ=βP(Ξβ=βtnβ£Ξβββ{tn,βfp}),

TPRβ=βP(Ξβ=βtpβ£Ξβββ{fn,βtp}), and

PPVβ=βP(Ξβ=βtpβ£Ξβββ{fp,βtp}). All the other performance indicators (the F-score, balanced accuracy,

etc) can be derived from the probabilistic indicators.

3βSummarizing the performance indicators for several videos

Let us denote a set of videos by V. We generalize the random experiment defined for one video to several videos as follows.

Random Experiment 2 (for several videos) First, draw one video V at random in the set V, following an arbitrarily chosen distribution P(V). Then, draw one pixel at random (all pixels being equally likely) from V and observe the ground-truth class Y and the predicted class YΜ for this pixel. The result of the experiment is the pair Ξβ=β(Y,βYΜ).

As the result of this second random experiment is again a pair of classes, as encountered for a single video (first random experiment), all performance indicators can be defined in the same way. This is important for the interpretability, since it guarantees that all indicators are coherent and that the probabilistic indicators preserve a probabilistic meaning.

The distribution of probabilities P(V) parameterizes the random experiment. It can be arbitrarily chosen to reflect the relative weights given to the videos. For example, one could use the weights considered in CDNET. An alternative consists in choosing weights proportional to the size of the videos (the number of pixels multiplied by the number of frames). This leads to identical distributions P(Ξ) between the second random experiment and the first one with the fictitiously aggregated video βͺvβββVv.

We denote by I(v) the value of an indicator I obtained with our first random experiment on a video vβββV, and by I(V) the value of I resulting from our second random experiment applied on the set V of videos.

We claim that the indicators

I(V) summarize the performances corresponding to the various videos, because they can be computed based on some

I(v), exclusively. To prove it, let us consider a probabilistic indicator

IAβ£β¬ defined as

P(ΞβββAβ£Ξββββ¬), and

I⬠as

P(Ξββββ¬). Thanks to our second random experiment, we have

with

Thus, the summarized probabilistic indicator

IAβ£β¬(V) is a weighted arithmetic mean of the indicators

{IAβ£β¬(v)β£vβββV}, the weights being the (normalized) product between the relative importance

P(Vβ=βv) given to the video

v and the corresponding

Iβ¬(v):

In the particular case of an unconditional probabilistic indicator

IAβ=βIAβ£{tn,βfp,βfn,βtp}, EquationΒ (

4β) reduces to

Note that we are able to summarize probabilistic indicators that are undefined for some, but not all, videos. The reason is that

IAβ£β¬(v) is undefined only when

Iβ¬(v)β=β0, and we observe that only the product of these two quantities appears in EquationΒ (

4β). This product is always well defined since it is an unconditional probability (

Iβ¬(v) IAβ£β¬(v)β=βIAβ©β¬(v)). To emphasize it, we rewrite EquationΒ (

4β) as

Applying our summarization in the ROC

(FPR,βTPR) and PR

(Rβ=βTPR,βPβ=βPPV) spaces.

Let us consider the case in which

Οβ+β,

Οβ+β,

FPR,

TPR, and

PPV are known for each video. The summarized indicators can be computed by EquationsΒ (

4β)-(

5β), since

Οβ+ββ=βI{fn,βtp},

Οβ+ββ=βI{fp,βtp},

FPRβ=βI{fp}β£{tn,βfp},

TPRβ=βI{tp}β£{fn,βtp}, and

PPVβ=βI{tp}β£{fp,βtp}. This yields:

The passage from ROC to PR is given by:

A generic algorithm for the computation of any summarized indicator.

Let us assume that the elements of the normalized confusion matrix (I{tn}, I{fp}, I{fn}, and I{tp}) can be retrieved for each video. This could be done by computing them based on other indicators. In this case, an easy-to-code algorithm to summarize the performances is the following:

stepΒ 1: arbitrarily weight the videos with P(V),

stepΒ 2: retrieve I{tn}(v), I{fp}(v), I{fn}(v), and I{tp}(v) for each video v,

stepΒ 3: then compute

I{tn}(V),

I{fp}(V),

I{fn}(V), and

I{tp}(V) with EquationΒ (

5β),

stepΒ 4: and finally derive all the desired summarized indicators based on their relationships with the

I{tn},βI{fp},βI{fn},βI{tp} indicators. For example,

F(V)β=β(2βI{tp}(V))/(I{fp}(V)β+βI{fn}(V)β+β2βI{tp}(V)) .

4βExperiments with CDNET 2014

We illustrate the summarization with the CDNET 2014 datasetΒ

[9] (organized in 11 categories, containing each 4 to 6 videos). We recomputed all the performance indicators for the 36 unsupervised background subtraction algorithms (we could consider all the algorithms as well) whose segmentation maps are given on

http://changedetection.net, compared to the ground-truth segmentation maps.

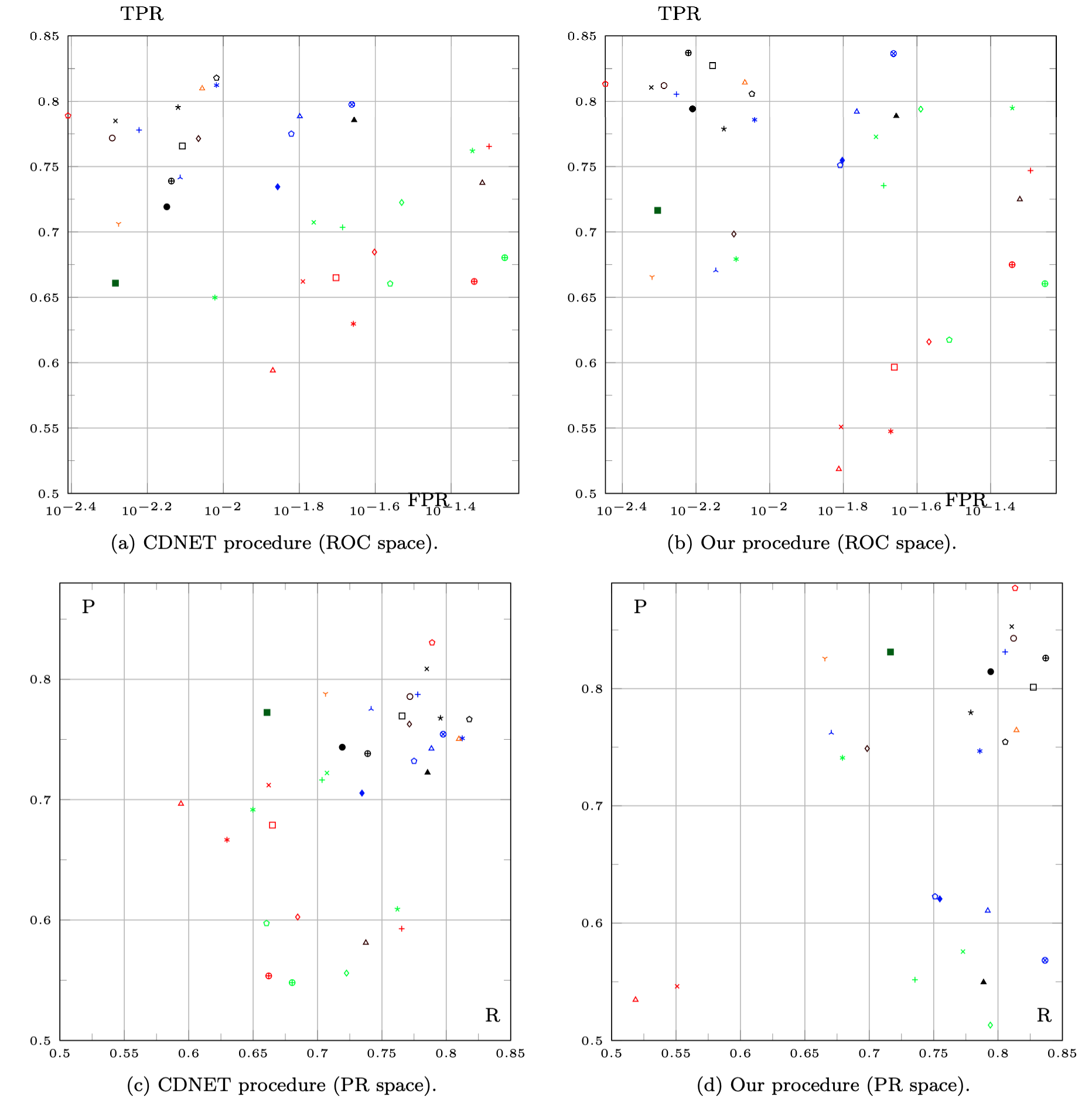

Our first experiment aims at determining if the summarized performance is \strikeout off\xout off\uuline off\uwave offsignificantly

\xout default\uuline default\uwave default \strikeout off\xout off\uuline off\uwave offaffected by the summarization procedure

\xout default\uuline default\uwave default. FigureΒ 2β compares the results of two summarization procedures, in both the ROC and PR spaces.

-

In the original CDNET procedure, performance indicators are obtained for each category by averaging the indicators measured on each video individually. A final summarized performance is then computed by averaging the performance indicators over the categories.

-

We applied our summarization, with the same weights for each category as in the original setup; thus, for each video, we have P(Vβ=βv)β=β(1)/(11)βΓβ(1)/(M), where M is the number of videos in the corresponding category.

\strikeout off\xout off\uuline off\uwave offIt can be seen that \xout default\uuline default\uwave defaultthe results \strikeout off\xout off\uuline off\uwave offdiffer significantly (both in ROC and PR), which emphasizes the influence of the summarization procedure for the comparison between algorithms. However, with the original summarization procedure, the intrinsic relationships between indicators are not preserved at the summarized level, while ours preserves them.

\strikeout off\xout off\uuline off\uwave offOur second experiment aims at determining if the ranking between algorithms depends on the summarization procedure. Because the F-score is often considered as an appropriate ranking indicator for background subtraction, we performed our experiment with it. TableΒ

1β\xout default\uuline default\uwave default shows the \strikeout off\xout off\uuline off\uwave off

F-scores and ranks obtained according to the same two summarization procedures. We see that the summarization procedure affects the ranking.

\xout default\uuline default\uwave default

|

Algorithm

|

F of [9]

|

F (ours)

|

|

IUTIS-5

|

0.7821 (2)

|

0.8312 (3)

|

|

SemanticBGSΒ [8]

|

0.8098 (1)

|

0.8479 (1)

|

|

IUTIS-3

|

0.7694 (3)

|

0.8182 (5)

|

|

WisenetMD

|

0.7559 (4)

|

0.7791 (10)

|

|

PAWCS

|

0.7478 (8)

|

0.8272 (4)

|

|

SuBSENSE

|

0.7453 (7)

|

0.7657 (12)

|

|

WeSamBE

|

0.7491 (6)

|

0.7792 (9)

|

|

SharedModel

|

0.7569 (5)

|

0.7885 (8)

|

Table 1βExtract of F-scores (ranks) obtained with two summarization procedures on CDNET.

5βConclusion

In background subtraction, algorithms are evaluated by applying them on videos and comparing their results to ground-truth references. Performance indicators of individual videos are then combined to derive indicators representative for a set of videos, during a procedure called “summarization”.

We have shown that a summarization procedure based on the arithmetic mean leads to inconsistencies between summarized indicators and complicates the comparison between algorithms. Therefore, based on the definition of a random experiment, we presented a new summarization procedure and formulas that preserve the intrinsic relationships between indicators. These summarized indicators are specific for this random experiment and for the weights given to the various videos. An easy-to-code algorithm for our summarization procedure has been given. Our procedure is illustrated and commented on the CDNET dataset.

As a general conclusion, for background subtraction involving multiple videos, we recommend to always use our summarization procedure, instead of the arithmetic mean, to combine performance indicators calculated separately on each video. By doing so, the formulas that hold between them at the video level also hold at the summarized level.

References

[1] A. Sobral, A. Vacavant. A comprehensive review of background subtraction algorithms evaluated with synthetic and real videos. Computer Vision and Image Understanding, 122:4-21, 2014. URL https://doi.org/10.1016/j.cviu.2013.12.005.

[2] C. Cuevas, E. Yanez, N. Garcia. Labeled dataset for integral evaluation of moving object detection algorithms: LASIESTA. Computer Vision and Image Understanding, 152:103-117, 2016. URL https://doi.org/10.1016/j.cviu.2016.08.005.

[3] C. Goutte, E. Gaussier. A Probabilistic Interpretation of Precision, Recall and F-Score, with Implication for Evaluation. Advances in Information Retrieval (Proceedings of ECIR), 3408:345-359, 2005.

[4] G. Forman, M. Scholz. Apples-to-apples in Cross-validation Studies: Pitfalls in Classifier Performance Measurement. ACM SIGKDD Explorations Newsletter, 12(1):49-57, 2010.

[5] J. Bertrand. Calcul des probabilités. Gauthier-Villars et fils, 1889.

[6] J. Davis, M. Goadrich. The Relationship Between Precision-Recall and ROC Curves. International Conference on Machine Learning (ICML):233-240, 2006.

[7] K. Boyd, V. Costa, J. Davis, C. Page. Unachievable Region in Precision-Recall Space and Its Effect on Empirical Evaluation. International Conference on Machine Learning (ICML):639-646, 2012.

[8] M. Braham, S. Piérard, M. Van Droogenbroeck. Semantic Background Subtraction. IEEE International Conference on Image Processing:4552-4556, 2017. URL http://www.telecom.ulg.ac.be/publi/publications/braham/Braham2017Semantic.

[9] N. Goyette, P.-M. Jodoin, F. Porikli, J. Konrad, P. Ishwar. A Novel Video Dataset for Change Detection Benchmarking. IEEE Transactions on Image Processing, 23(11):4663-4679, 2014. URL https://doi.org/10.1109/TIP.2014.2346013.

[10] N. Goyette, P.-M. Jodoin, F. Porikli, J. Konrad, P. Ishwar. changedetection.net: A New Change Detection Benchmark Dataset. IEEE International Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), 2012. URL https://doi.org/10.1109/CVPRW.2012.6238919.

[11] N. Vaswani, T. Bouwmans, S. Javed, P. Narayanamurthy. Robust Subspace Learning: Robust PCA, Robust Subspace Tracking, and Robust Subspace Recovery. IEEE Signal Processing Magazine, 35(4):32-55, 2018. URL https://doi.org/10.1109/MSP.2018.2826566.

[12] P. Flach. The Geometry of ROC Space: Understanding Machine Learning Metrics through ROC Isometrics. International Conference on Machine Learning (ICML):194-201, 2003.

[13] P.-M. Jodoin, S. Piérard, Y. Wang, M. Van Droogenbroeck. Overview and Benchmarking of Motion Detection Methods. In Background Modeling and Foreground Detection for Video Surveillance . Chapman and Hall/CRC, Jul 2014. URL http://www.crcnetbase.com/doi/abs/10.1201/b17223-30.

[14] S. Elhabian, K. El-Sayed, S. Ahmed. Moving Object Detection in Spatial Domain using Background Removal Techniques β State-of-Art. Recent Patents on Computer Science, 1:32-54, 2008. URL https://doi.org/10.2174/2213275910801010032.

[15] T. Bouwmans, F. El Baf, B. Vachon. Statistical Background Modeling for Foreground Detection: A Survey. In Handbook of Pattern Recognition and Computer Vision (volume 4) . World Scientific Publishing, January 2010. URL https://doi.org/10.1142/9789814273398_0008.

[16] T. Bouwmans, S. Javed, M. Sultana, S. Jung. Deep Neural Network Concepts for Background Subtraction: A Systematic Review and Comparative Evaluation. Neural Networks, 117:8-66, 2019. URL https://doi.org/10.1016/j.neunet.2019.04.024.

[17] T. Bouwmans. Traditional and recent approaches in background modeling for foreground detection: An overview. Computer Science Review, 11-12:31-66, 2014. URL https://doi.org/10.1016/j.cosrev.2014.04.001.

[18] T. Fawcett. An introduction to ROC analysis. Pattern Recognition Letters, 27(8):861-874, 2006.