20 years of innovation in research

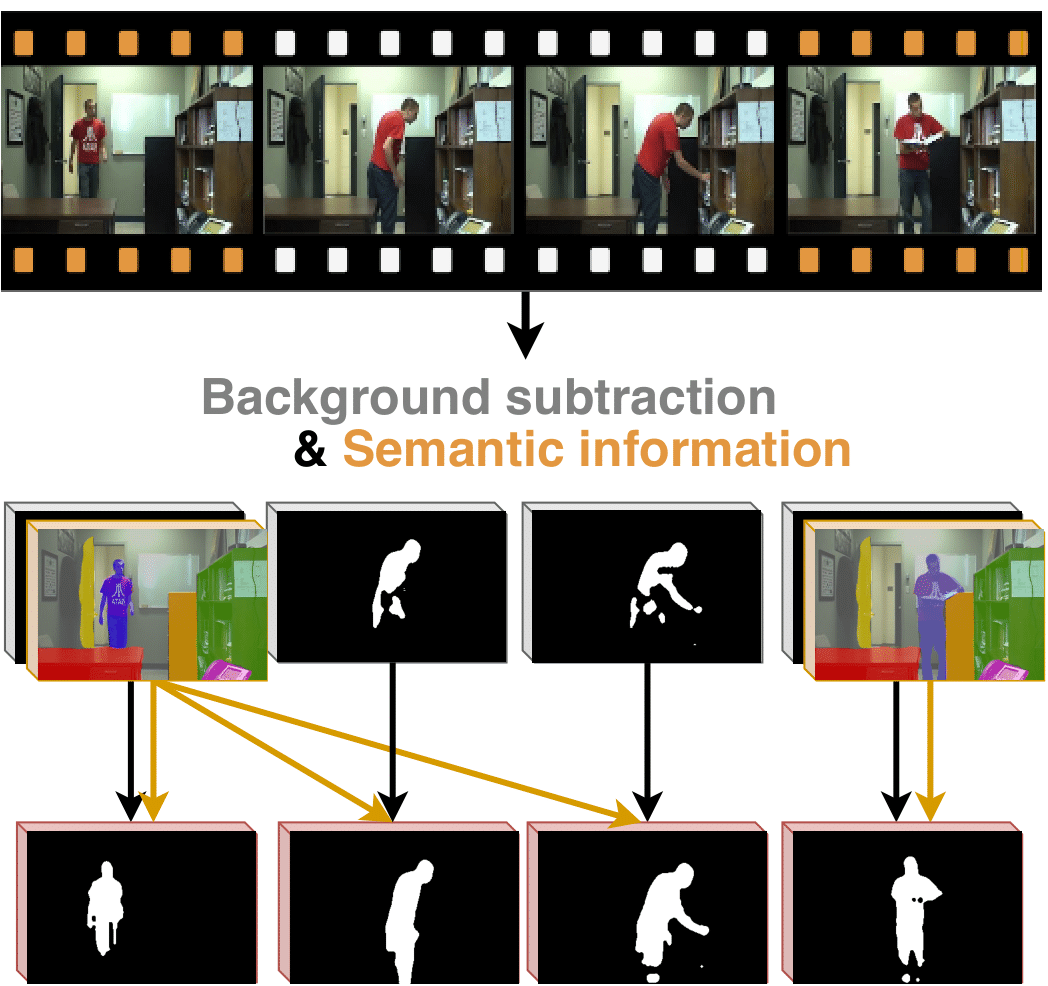

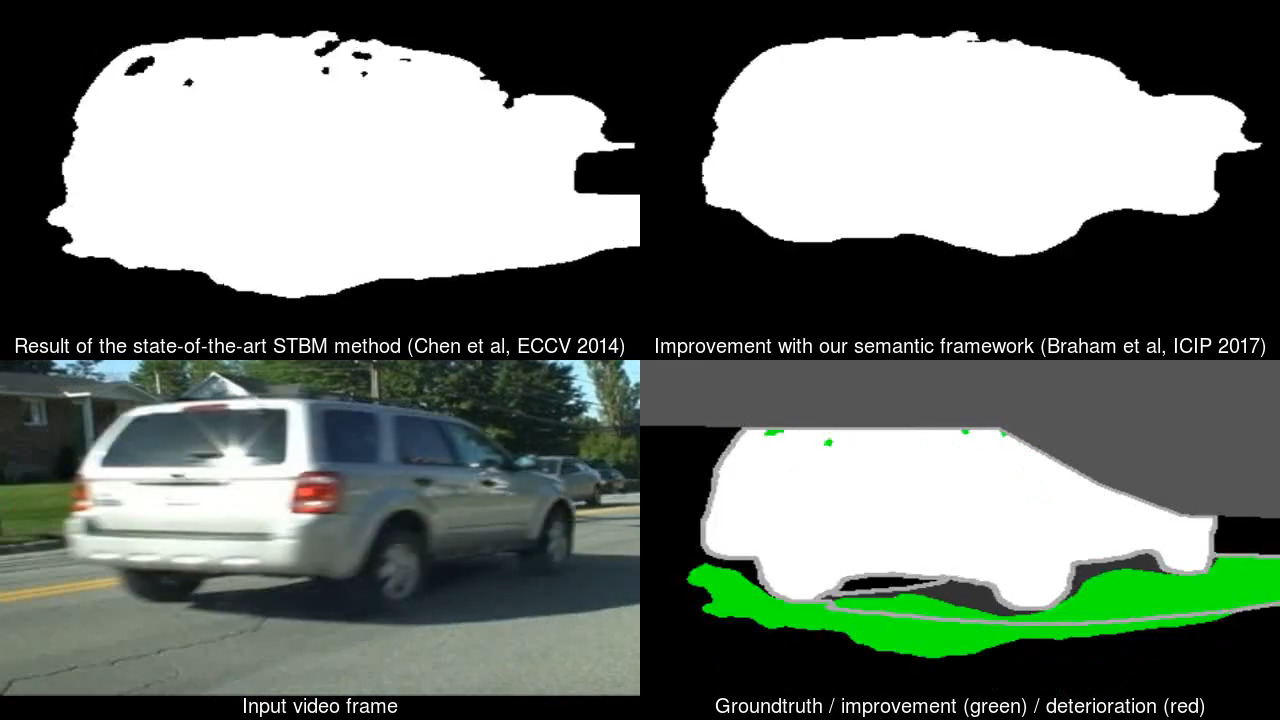

Real-Time Semantic Background Subtraction: a real-time, patented extension of our technique that combines background subtraction and semantic information, making it possible to cope with all the background subtraction challenges simultaneously.

Innovations (covered by patents):

* real-time processing!

* simple technique to combine information from background subtraction and semantic segmentation

* enables real-time background subtraction alongside a non-real-time, asynchronous stream of semantic information

Source code (python, Evaluation License): https://github.com/cioppaanthony/rt-sbs
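The idea of running background subtraction in real time while semantic maps arrive late, and only for some frames, can be illustrated with a small scheduling loop. This sketch is our own simplification, not the RT-SBS algorithm: each frame comes with a boolean background subtraction mask, semantic maps (per-pixel foreground probabilities) are delivered through a hypothetical `semantic_arrivals` dictionary, and the most recent map is reused until a newer one arrives. The fusion rule (`combine`, threshold `tau_bg`) is a deliberately minimal, hypothetical veto.

```python
import numpy as np


def combine(bgs_mask, sem_prob, tau_bg=0.1):
    """Simplified fusion (hypothetical rule): semantics can only veto foreground pixels."""
    if sem_prob is None:  # no semantic map received yet: fall back on background subtraction alone
        return bgs_mask.copy()
    return bgs_mask & (sem_prob > tau_bg)


def run(bgs_masks, semantic_arrivals, tau_bg=0.1):
    """Produce one output per frame, using the most recent semantic map received so far.

    bgs_masks: list of boolean masks (True = foreground), one per frame, always on time.
    semantic_arrivals: dict mapping the frame index at which a semantic map becomes
        available to that map (per-pixel foreground probability); maps arrive late
        and only for some frames.
    """
    latest = None
    outputs = []
    for t, mask in enumerate(bgs_masks):
        if t in semantic_arrivals:  # a new (possibly stale) semantic map has arrived
            latest = semantic_arrivals[t]
        outputs.append(combine(mask, latest, tau_bg))
    return outputs


# Four frames in which background subtraction flags every pixel; one semantic map arrives at frame 2.
masks = [np.ones((2, 2), dtype=bool) for _ in range(4)]
arrivals = {2: np.array([[0.9, 0.0], [0.9, 0.9]])}
out = run(masks, arrivals)
```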

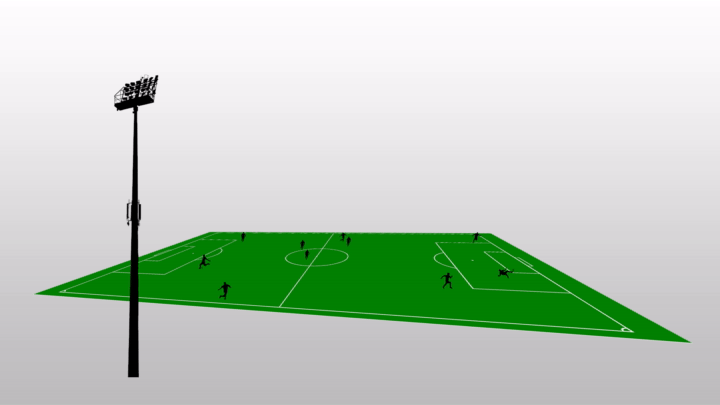

Multimodal and multiview distillation for real-time player detection on a football field (best paper award at the CVPR 2020 CVSports workshop).

Innovations:

* real-time player segmentation

* no annotations required

* combines an infrared camera and a fisheye camera

* ideal for automatic monitoring and counting of players

Source code (python, GNU General Public License v3.0): https://github.com/cioppaanthony/multimodal-multiview-distillation

Publication: [15]

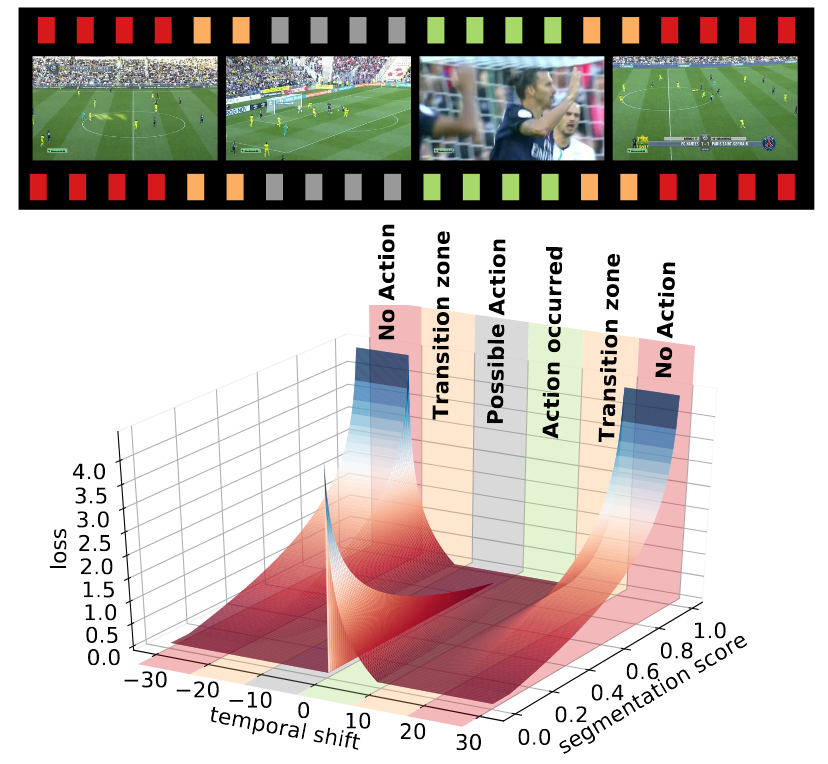

Action spotting in sports videos by machine learning (successfully applied to SoccerNet and ActivityNet).

Innovations:

* generic loss function tailored to action spotting

* allows a context-aware definition of actions in the temporal domain

* large increase in action spotting performance on SoccerNet

* automatic highlight generation for soccer

Source code (python, Apache License 2.0): https://github.com/cioppaanthony/context-aware-loss

Publication: [14]
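A context-aware treatment of actions needs, for every frame, its temporal position relative to the surrounding actions. The sketch below computes the signed offset (in frames) from each frame to its nearest annotated action and buckets it into context zones. The zone names and the `near`/`far` boundaries are hypothetical choices for illustration; the loss function of [14] defines its own temporal segmentation and penalties, which this code does not reproduce.

```python
import numpy as np


def signed_offsets(num_frames, action_frames):
    """Signed offset t - a from each frame t to its nearest annotated action frame a."""
    t = np.arange(num_frames)[:, None]
    a = np.asarray(action_frames)[None, :]
    diff = t - a                             # shape (num_frames, num_actions)
    nearest = np.abs(diff).argmin(axis=1)    # closest action (first one on ties)
    return diff[np.arange(num_frames), nearest]


def context_zones(offsets, near=5, far=20):
    """Bucket offsets into hypothetical context zones around the nearest action."""
    zones = np.full(offsets.shape, "far", dtype=object)
    zones[(offsets < -near) & (offsets >= -far)] = "before"
    zones[(offsets < 0) & (offsets >= -near)] = "just_before"
    zones[(offsets >= 0) & (offsets <= near)] = "just_after"
    zones[(offsets > near) & (offsets <= far)] = "after"
    return zones


# 30 frames with actions annotated at frames 10 and 25.
offsets = signed_offsets(30, [10, 25])
zones = context_zones(offsets)
```

A loss could then weight or ignore frames differently depending on their zone; how to do so is exactly what a context-aware loss has to specify.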

ARTHuS (generic online distillation for image segmentation): a technique to build adaptive, real-time, match-specific networks for human segmentation without requiring any manual annotation (best paper award at the CVPR 2019 CVSports workshop).

Innovations:

* real-time segmentation of humans in videos

* highly effective real-time human segmentation network that evolves over time

Source code (python, GNU Affero General Public License v3.0): https://github.com/cioppaanthony/online-distillation

Publication: [13]
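Online distillation can be illustrated on a toy stream: a slow teacher labels only some frames, and a fast student, which produces an output for every frame, is retrained on those pseudo-labels, so no manual annotation is involved. Everything here is a hypothetical stand-in (2-D pixel features, a fixed linear rule as the teacher, logistic regression as the student); ARTHuS itself relies on deep segmentation networks [13].

```python
import numpy as np

rng = np.random.default_rng(0)
TEACHER_W, TEACHER_B = np.array([1.5, -2.0]), 0.3  # hypothetical parameters of the stand-in teacher


def teacher(pixels):
    """Stand-in for a slow but accurate segmentation network (hypothetical)."""
    return (pixels @ TEACHER_W + TEACHER_B > 0).astype(float)


class Student:
    """Tiny logistic-regression 'network', cheap enough to run on every frame."""

    def __init__(self, dim, lr=0.5):
        self.w, self.b, self.lr = np.zeros(dim), 0.0, lr

    def predict(self, pixels):
        return 1.0 / (1.0 + np.exp(-(pixels @ self.w + self.b)))

    def update(self, pixels, targets, steps=5):
        # A few gradient steps on the logistic loss, using the teacher's pseudo-labels as targets.
        for _ in range(steps):
            err = self.predict(pixels) - targets
            self.w -= self.lr * pixels.T @ err / len(targets)
            self.b -= self.lr * err.mean()


student = Student(dim=2)
for t in range(100):                       # video stream; a frame is 500 pixel feature vectors
    frame = rng.normal(size=(500, 2))
    mask = student.predict(frame) > 0.5     # real-time output, produced for every frame
    if t % 10 == 0:                         # the teacher only processes some frames
        student.update(frame, teacher(frame))

# Agreement between student and teacher on unseen pixels.
test_pixels = rng.normal(size=(2000, 2))
agreement = np.mean((student.predict(test_pixels) > 0.5) == (teacher(test_pixels) > 0.5))
```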

Mid-Air: a multi-purpose synthetic dataset for low-altitude drone flights. It provides a large amount of synchronized data corresponding to flight records for multi-modal vision sensors and navigation sensors mounted on board a flying quadcopter.

Innovations:

* large training set (420k images)

* multi-modal sensors (3 cameras, IMU, GPS)

* 7 weather conditions, 3 maps

Dataset (Attribution-NonCommercial-ShareAlike 4.0 International; CC BY-NC-SA 4.0): downloadable at Mid-Air

Publication: [16]

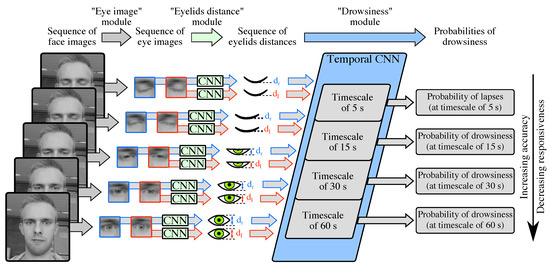

Driver monitoring and drowsiness monitoring by video analysis: development of a real-time drowsiness monitoring system based on the measurement of eyelid distances.

Innovations:

* deep learning-based driver monitoring system comprising 3 modules and 2 proxies (eyelid distance, reaction time)

* multi-scale, responsive, and real-time system for drowsiness monitoring

Source code (python) + dataset: https://github.com/QMassoz/mts-drowsiness
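A common way to turn a per-frame eyelid distance into a drowsiness indicator is a PERCLOS-style measure: the fraction of time the eyes are closed within a sliding window. The sketch below assumes a fixed closure threshold and window length (both hypothetical parameters); it is a classic baseline, not the deep learning, multi-timescale system described above [33].

```python
import numpy as np


def closed_fraction(eyelid_distance, closed_threshold, window):
    """Fraction of 'eye closed' frames in each sliding window (PERCLOS-style proxy).

    eyelid_distance: per-frame distance between the eyelids (any consistent unit).
    closed_threshold: distance below which the eye is considered closed (assumed value).
    window: number of frames per window.
    """
    closed = (np.asarray(eyelid_distance) < closed_threshold).astype(float)
    # Moving sum via a cumulative sum: entry i covers frames [i, i + window).
    csum = np.concatenate(([0.0], np.cumsum(closed)))
    return (csum[window:] - csum[:-window]) / window


# Hypothetical eyelid distances: the eye closes during frames 2 and 3.
fractions = closed_fraction([8, 8, 1, 1, 8, 8], closed_threshold=3, window=2)
```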

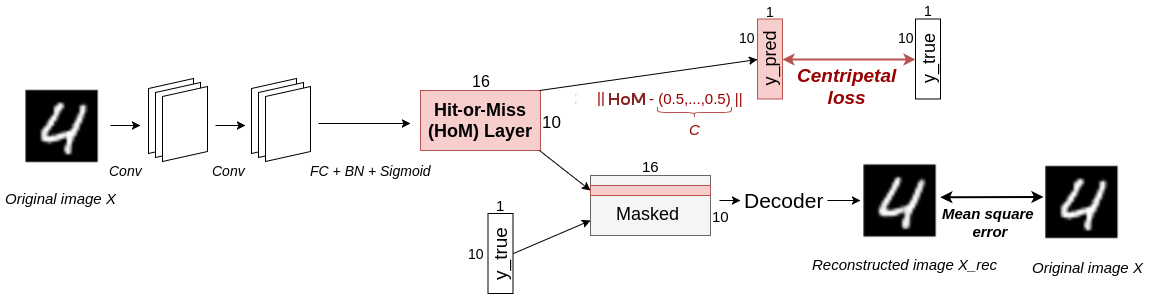

HitNet: an innovative deep neural network design with several key concepts.

Innovations (covered by the patent):

* new output layer based on capsules, named the Hit-or-Miss layer (speeds up convergence), and on a centripetal loss function

* first data augmentation technique mixing the data space and the feature space

* introduction of the notion of ghost capsules, which allow alternatives and help cope with wrong annotations

Source code (python, Educational License): https://orbi.uliege.be/bitstream/2268/225615/2/HitNet.zip

Stationary Background Generation (LaBGen): algorithms that generate a single representative image of the background of a video or of a series of images, even in the presence of persistent occlusions.

Innovations:

* award-winning technology

* two working modes: offline and online (that is, one output background image after each new input image)

* real-time processing

Source code (C++, GNU General Public License v3.0): https://github.com/benlaug/labgen
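Combining a temporal median with motion information, as the title of [7] suggests, pays off when an occluder covers a pixel most of the time. The sketch below is not LaBGen: it computes a per-pixel median that ignores observations flagged as moving by a hypothetical `motion_masks` input (any background subtraction output could play that role), plus an online variant that returns one background estimate after each new image.

```python
import warnings

import numpy as np


def median_background(frames, motion_masks=None):
    """Per-pixel temporal median, optionally ignoring observations flagged as moving.

    frames: array (T, H, W) of grayscale images.
    motion_masks: optional boolean array (T, H, W); True marks a moving observation.
    """
    frames = np.asarray(frames, dtype=float)
    plain = np.median(frames, axis=0)
    if motion_masks is None:
        return plain
    kept = np.where(motion_masks, np.nan, frames)  # discard moving observations
    with warnings.catch_warnings():
        warnings.simplefilter("ignore", RuntimeWarning)  # pixels that are always moving
        bg = np.nanmedian(kept, axis=0)
    # Where every observation was flagged as moving, fall back on the plain median.
    return np.where(np.isnan(bg), plain, bg)


class OnlineMedianBackground:
    """Online mode: one background estimate after each new input image."""

    def __init__(self):
        self.frames, self.masks = [], []

    def add(self, frame, motion_mask):
        self.frames.append(frame)
        self.masks.append(motion_mask)
        return median_background(np.stack(self.frames), np.stack(self.masks))


# A pixel hidden by an occluder (value 200) in 3 of 5 frames: the plain median keeps the occluder.
frames = np.full((5, 4, 4), 10.0)
frames[:3, 1, 1] = 200.0
masks = np.zeros(frames.shape, dtype=bool)
masks[:3, 1, 1] = True  # flagged as moving, e.g. by a background subtraction algorithm
plain_bg = median_background(frames)
masked_bg = median_background(frames, masks)
```

Recomputing the median over all stored frames keeps the online variant simple; a practical implementation would bound memory and cost, for example with a fixed-size buffer.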

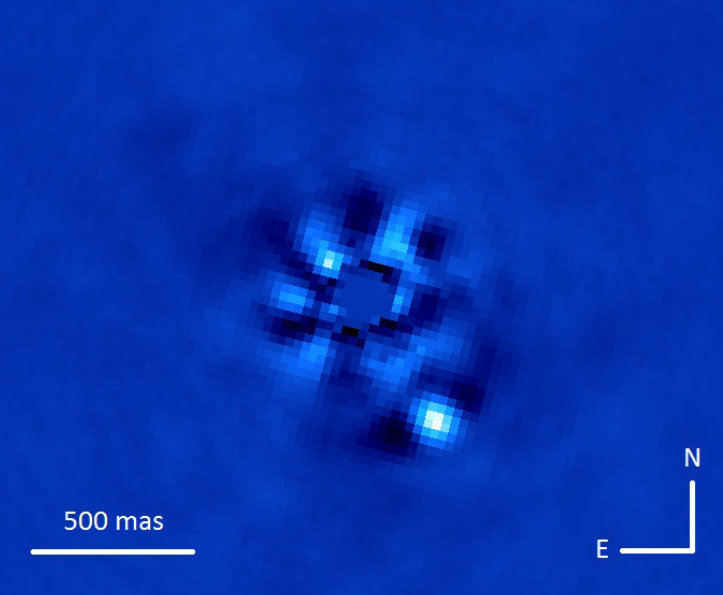

Exoplanet detection: development of imaging techniques based on machine learning for the detection of exoplanets by direct imaging.

Innovations:

* first deep learning-based system for exoplanet detection (SODINN)

* model for the generation of synthetic data

* introduction of ROC-like curves for performance evaluation

Source code (python, MIT License): Vortex Image Processing (VIP) package
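The sketch below assumes that a ROC-like curve plots, for a range of detection thresholds, the true positive rate measured on injected synthetic companions against the mean number of false positives per detection map. The input format (one score per injected companion, one list of false-alarm scores per map) is hypothetical and is not the API of the VIP package.

```python
import numpy as np


def roc_like(injected_scores, false_alarm_scores, thresholds):
    """True positive rate and mean number of false positives per map, for each threshold.

    injected_scores: detection score measured at each injected synthetic companion.
    false_alarm_scores: one sequence per detection map, holding the scores of the
        candidate detections that do not match any injected companion.
    """
    injected = np.asarray(injected_scores, dtype=float)
    tpr, mean_fp = [], []
    for thr in thresholds:
        tpr.append(np.mean(injected >= thr))
        false_positives = [np.sum(np.asarray(s, dtype=float) >= thr) for s in false_alarm_scores]
        mean_fp.append(np.mean(false_positives))
    return np.array(tpr), np.array(mean_fp)


# Hypothetical scores: 4 injected companions, 2 detection maps.
tpr, mean_fp = roc_like([0.9, 0.7, 0.4, 0.2], [[0.5, 0.1], [0.3]], thresholds=[0.8, 0.35, 0.0])
```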

Semantic background subtraction: development of techniques that improve motion detection by background subtraction through the incorporation of semantic information, making it possible to cope with all the background subtraction challenges simultaneously.

Innovations (covered by patents):

* simple technique to combine information from background subtraction and semantic segmentation

* enables real-time background subtraction alongside a non-real-time, asynchronous stream of semantic information
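One simple way to combine the two sources of information is a pair of per-pixel rules applied on top of any background subtraction mask. The sketch below assumes such a two-threshold rule, with a hypothetical semantic background model `sem_bg_model` and thresholds `tau_bg` and `tau_fg`; see [19] for the actual technique, including how the semantic background model is maintained over time.

```python
import numpy as np


def semantic_bgs(bgs_fg, sem_prob, sem_bg_model, tau_bg=0.3, tau_fg=0.1):
    """Refine a background subtraction mask with semantic information (hypothetical two-threshold rule).

    bgs_fg: boolean mask from any background subtraction algorithm (True = foreground).
    sem_prob: per-pixel probability of belonging to an object class that is likely to move.
    sem_bg_model: per-pixel value of that probability observed on the background.
    """
    out = bgs_fg.copy()
    # Semantic evidence for foreground: the probability clearly rose above its background value.
    out[(sem_prob - sem_bg_model) >= tau_fg] = True
    # Semantic evidence for background (applied last, so it takes precedence): a low probability.
    out[sem_prob <= tau_bg] = False
    return out


bgs = np.array([[True, False, True]])
sem = np.array([[0.05, 0.9, 0.5]])
model = np.array([[0.0, 0.2, 0.45]])
refined = semantic_bgs(bgs, sem, model)
```

Pixels where neither rule fires (the third one here) keep the decision of the background subtraction algorithm, which is why any such algorithm can be plugged in.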

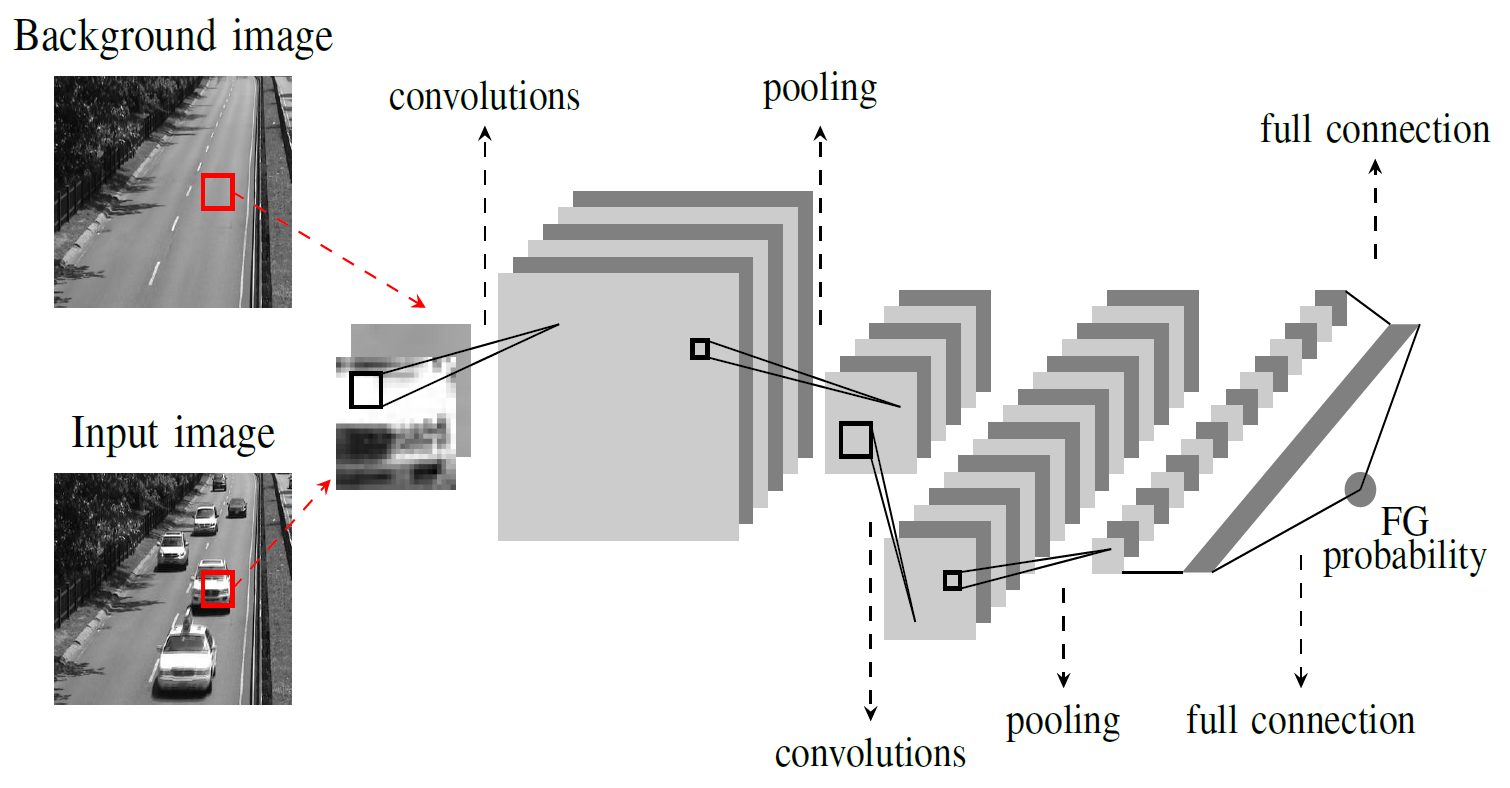

Scene-specific background subtraction: use of deep learning technologies for background subtraction.

Innovations:

* the first method using deep learning concepts for background subtraction

* designed to take into account the specificities of a scene

* a design that can mimic any unsupervised background subtraction algorithm, with a constant processing time and real-time execution

Publication: [18]

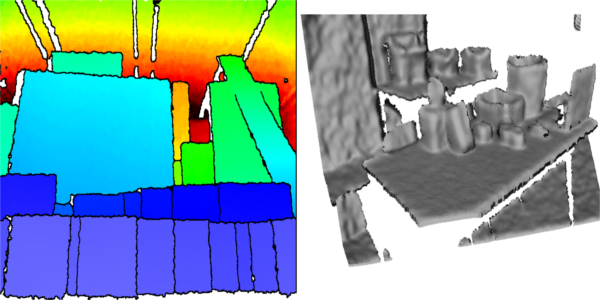

Processing of range images. The use of range sensors, such as Kinect cameras and time-of-flight devices, is spreading quickly to a large number of computer vision problems. Yet the basic image processing toolbox for range images (edge detection, noise filtering, interest point detection) still consists of algorithms designed for intensity images. We develop new tools aimed directly at range image processing.

Innovations:

* first probabilistic model for range images

* filters and edge detectors dedicated to range images

Source code (C++, BSD License): http://www.telecom.ulg.ac.be/range-model/ped.tar.bz2
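To show the kind of tool meant here, the simplest jump edge detector thresholds depth differences between neighboring pixels. This naive baseline is our own illustration, not the probabilistic model or the edge detector developed by the group [6]; the depth-relative threshold `rel_threshold` is an assumption motivated by the fact that range noise tends to grow with distance.

```python
import numpy as np


def jump_edges(depth, rel_threshold=0.05):
    """Flag depth discontinuities (jump edges) between 4-connected neighbors.

    A pair of neighbors is a jump when their depth difference exceeds rel_threshold
    times the smaller of the two depths (hypothetical criterion); both pixels are marked.
    """
    d = np.asarray(depth, dtype=float)
    edges = np.zeros(d.shape, dtype=bool)
    # Horizontal neighbor pairs.
    jh = np.abs(d[:, 1:] - d[:, :-1]) > rel_threshold * np.minimum(d[:, 1:], d[:, :-1])
    edges[:, :-1] |= jh
    edges[:, 1:] |= jh
    # Vertical neighbor pairs.
    jv = np.abs(d[1:, :] - d[:-1, :]) > rel_threshold * np.minimum(d[1:, :], d[:-1, :])
    edges[:-1, :] |= jv
    edges[1:, :] |= jv
    return edges


# A foreground surface at 1 m in front of a wall at 3 m.
depth = np.array([[1.0, 1.0, 3.0, 3.0]] * 4)
edges = jump_edges(depth)
```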

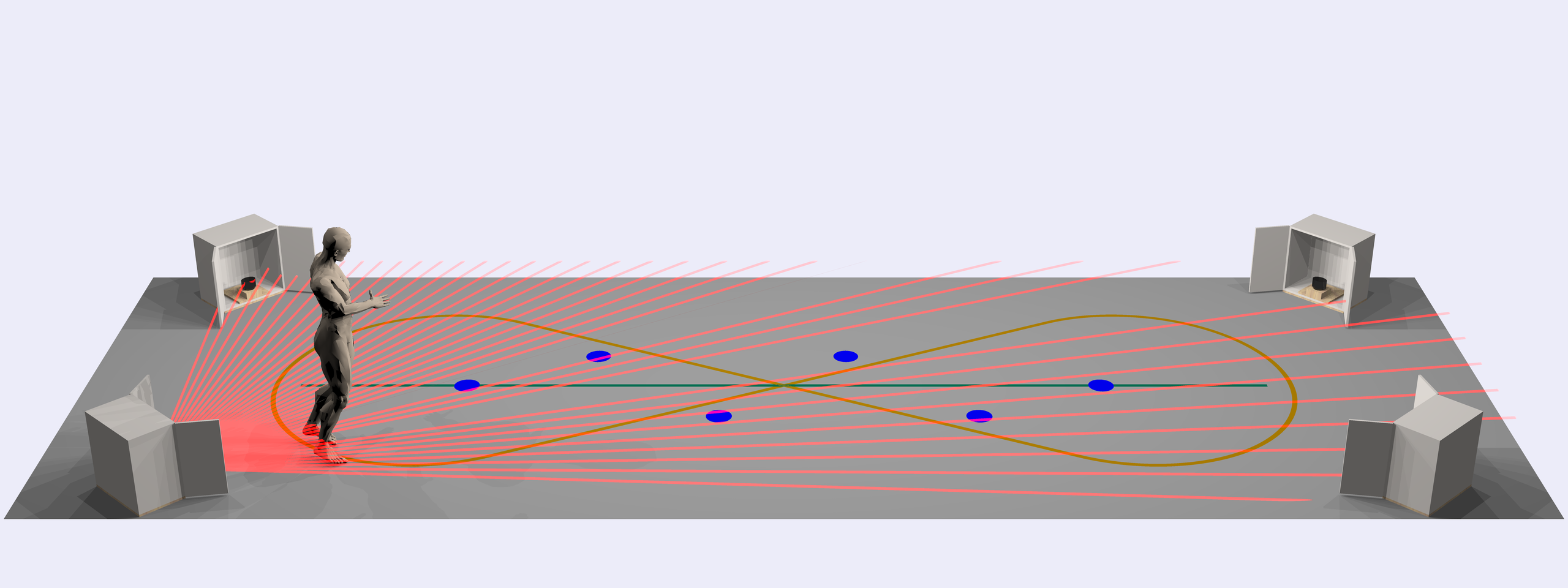

GAIMS (“Gait Measuring System”): we propose to measure the trajectories of the lower limb extremities with range laser scanners and to derive various gait characteristics from these trajectories. These characteristics can then be processed further to infer information about the observed person, for example to help diagnose various diseases or to support the longitudinal follow-up of patients with walking impairments.

Innovations:

* tool for the automatic determination of Expanded Disability Status Scale (EDSS) scores

* complete machine learning-based system for gait analysis

* general tool for comparing gait characteristics (based on our large custom dataset)
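Once foot contacts have been extracted from the laser scanner trajectories, several classic gait characteristics follow from simple arithmetic. The sketch below assumes a hypothetical input format (time and forward position of each successive foot contact, alternating feet); the extraction of contacts from raw trajectories [37] is not shown.

```python
import numpy as np


def gait_characteristics(contact_times, contact_positions):
    """Basic gait characteristics from successive foot contacts (alternating feet).

    contact_times: time (s) of each foot contact, in chronological order.
    contact_positions: forward position (m) of the contacting foot at each contact.
    """
    t = np.asarray(contact_times, dtype=float)
    x = np.asarray(contact_positions, dtype=float)
    step_times = np.diff(t)                # duration of each step
    step_lengths = np.abs(np.diff(x))      # distance covered by each step
    duration = t[-1] - t[0]
    return {
        "cadence_steps_per_min": 60.0 * len(step_times) / duration,
        "mean_step_length_m": float(step_lengths.mean()),
        "walking_speed_m_per_s": float(step_lengths.sum() / duration),
        # Coefficient of variation of step durations: a simple regularity measure.
        "step_time_cv": float(step_times.std() / step_times.mean()),
    }


# Hypothetical walk: one contact every 0.5 s, 0.6 m apart.
gait = gait_characteristics([0.0, 0.5, 1.0, 1.5, 2.0], [0.0, 0.6, 1.2, 1.8, 2.4])
```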

ViBe: a fast and innovative technique for background subtraction.

Innovations:

* fastest sample-based algorithm for background subtraction

* operations limited to subtractions, comparisons, and memory manipulation

* patented technology including the following novelties: a technique for model initialization, a random time sampling strategy, a spatial propagation strategy, and the backwards analysis of images in a video stream

* ViBe forms the basis of all current unsupervised state-of-the-art algorithms for background subtraction

Source code (C/C++, Evaluation License): C, C++, and benchmarking programs for Linux and Windows
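The core of ViBe can be summarized in a few array operations. The sketch below assumes grayscale frames and the default parameters reported in [32] (20 samples per pixel, radius 20, 2 required matches, subsampling factor 16); it omits the backwards analysis and other refinements, and it is only meant to help understand the principle, since ViBe itself is covered by patents (see the references).

```python
import numpy as np


class ViBe:
    """Minimal grayscale ViBe sketch (classification, conservative random update, spatial propagation)."""

    def __init__(self, first_frame, n_samples=20, radius=20, min_matches=2, subsampling=16, seed=0):
        self.rng = np.random.default_rng(seed)
        self.n, self.radius, self.min_matches, self.phi = n_samples, radius, min_matches, subsampling
        frame = np.asarray(first_frame, dtype=np.int32)
        h, w = frame.shape
        # Initialization from one frame: each sample is drawn from the pixel's 3x3 neighborhood
        # (center included, our choice).
        padded = np.pad(frame, 1, mode="edge")
        ys, xs = np.indices((h, w))
        dy = self.rng.integers(0, 3, size=(n_samples, h, w))
        dx = self.rng.integers(0, 3, size=(n_samples, h, w))
        self.samples = padded[ys + dy, xs + dx]  # shape (n_samples, h, w)

    def apply(self, frame):
        """Return a boolean foreground mask and update the model."""
        frame = np.asarray(frame, dtype=np.int32)
        h, w = frame.shape
        # Classification: background if at least min_matches samples lie within the radius.
        matches = (np.abs(self.samples - frame) < self.radius).sum(axis=0)
        background = matches >= self.min_matches
        # Conservative update: only background pixels update their own model, with probability 1/phi.
        ys, xs = np.nonzero(background & (self.rng.random((h, w)) < 1.0 / self.phi))
        self.samples[self.rng.integers(0, self.n, ys.size), ys, xs] = frame[ys, xs]
        # Spatial propagation: with probability 1/phi, the pixel value also replaces a sample
        # in the model of a random neighbor (which may be the pixel itself in this sketch).
        ys, xs = np.nonzero(background & (self.rng.random((h, w)) < 1.0 / self.phi))
        ny = np.clip(ys + self.rng.integers(-1, 2, ys.size), 0, h - 1)
        nx = np.clip(xs + self.rng.integers(-1, 2, xs.size), 0, w - 1)
        self.samples[self.rng.integers(0, self.n, ys.size), ny, nx] = frame[ys, xs]
        return ~background


# Usage: a uniform scene, then a bright square appears.
first = np.full((10, 10), 100, dtype=np.uint8)
vibe = ViBe(first)
frame = first.copy()
frame[3:6, 3:6] = 255
foreground = vibe.apply(frame)
```

Note that the update only needs comparisons, random draws, and memory writes, which is consistent with the low cost claimed above.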

Innovations:

* real-time encryption of JPEG and MJPEG images that remains format-compatible

* encryption can be limited to some areas

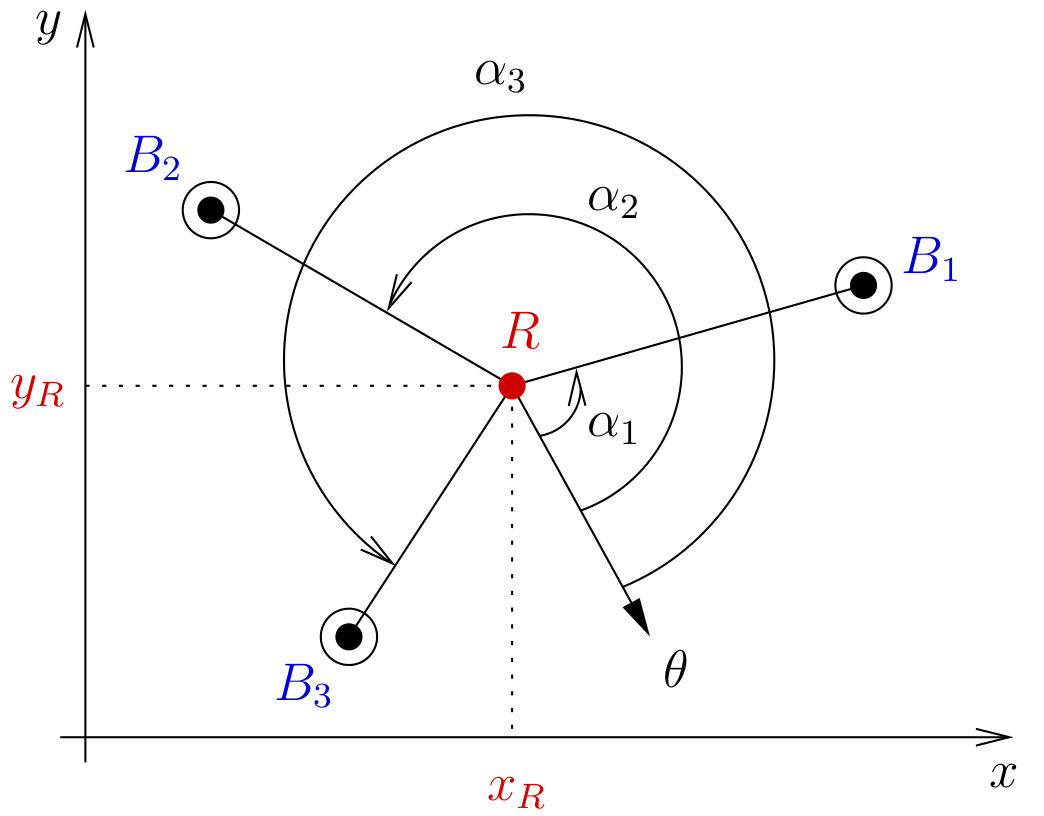

Robot triangulation: a new three-object triangulation algorithm for mobile robot positioning (a problem also called the resection problem).

Innovations:

* fastest algorithm for triangulation (called ToTal)

* the algorithm provides, at no additional cost, a quality index for the result

Source code (C, Educational License): implementations of 18 triangulation algorithms (including our ToTal algorithm) on GitHub
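The ToTal steps can be sketched as below, written from our reading of [39]; treat it as an illustration and check it against the paper and the C implementation linked above before relying on it. Assumptions: bearings are counterclockwise angles in radians relative to the robot heading, T31 is computed directly as cot(α1 − α3) (mathematically equivalent to the product formula), cotangents are bounded by clamping the sine away from zero, and 1/|D| is taken as the reliability index.

```python
import math


def cot(angle, eps=1e-12):
    """Cotangent, with the sine clamped away from zero to keep values bounded."""
    s = math.sin(angle)
    if abs(s) < eps:
        s = eps if s >= 0 else -eps
    return math.cos(angle) / s


def total(beacons, angles):
    """Three-object triangulation following the ToTal steps (sketch).

    beacons: [(x1, y1), (x2, y2), (x3, y3)] known beacon positions.
    angles: [a1, a2, a3] bearings (rad) of the beacons measured by the robot.
    Returns (x, y, D); D = 0 means the position cannot be determined.
    """
    (x1, y1), (x2, y2), (x3, y3) = beacons
    a1, a2, a3 = angles
    # 1. Coordinates relative to beacon 2.
    x1p, y1p, x3p, y3p = x1 - x2, y1 - y2, x3 - x2, y3 - y2
    # 2. Cotangents of the angle differences.
    t12, t23, t31 = cot(a2 - a1), cot(a3 - a2), cot(a1 - a3)
    # 3. Modified circle center coordinates.
    x12, y12 = x1p + t12 * y1p, y1p - t12 * x1p
    x23, y23 = x3p - t23 * y3p, y3p + t23 * x3p
    x31, y31 = (x3p + x1p) + t31 * (y3p - y1p), (y3p + y1p) - t31 * (x3p - x1p)
    # 4. Parameter k'31.
    k31 = x1p * x3p + y1p * y3p + t31 * (x1p * y3p - x3p * y1p)
    # 5. Denominator D.
    d = (x12 - x23) * (y23 - y31) - (y12 - y23) * (x23 - x31)
    if d == 0:
        raise ValueError("position undefined: robot on the circle through the three beacons")
    # 6. Robot position.
    return x2 + k31 * (y12 - y23) / d, y2 + k31 * (x23 - x12) / d, d


# Robot at the origin; beacons seen at bearings 0, pi/4 and pi/2.
x, y, d = total([(2, 0), (1, 1), (0, 2)], [0.0, math.pi / 4, math.pi / 2])
```

The whole computation is a fixed sequence of arithmetic operations with a single test, and D comes out of step 5 anyway, which is why the quality index costs nothing extra.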

libmorpho is an open software library that implements several basic operations of mathematical morphology: erosions, dilations, openings, and closings by lines, rectangles, or arbitrarily shaped structuring elements, or by structuring functions.

Innovations:

* fastest implementation of morphological operations with rectangular structuring elements on CPUs (even today, in 2021!)

* allows the computation of morphological operations with any arbitrarily shaped structuring element

Source code (ANSI C, GNU General Public License): libmorpho in ANSI C on GitHub
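For rectangular structuring elements, erosion is separable into a horizontal and a vertical segment erosion, and each 1-D erosion can be computed with a constant number of comparisons per sample, whatever the segment length. The sketch below uses the classic van Herk/Gil-Werman algorithm for the 1-D pass; it is not the algorithm used in libmorpho (see [41, 42] for the group's own methods), and it assumes odd, centered segments with borders padded by +inf.

```python
import numpy as np


def erode_1d(f, k):
    """Flat erosion of a 1-D signal by a centered segment of odd length k (van Herk/Gil-Werman)."""
    if k % 2 == 0:
        raise ValueError("k must be odd")
    f = np.asarray(f, dtype=float)
    n, r = len(f), k // 2
    p = np.concatenate((np.full(r, np.inf), f, np.full(r, np.inf)))  # +inf padding at the borders
    m = len(p)
    g = p.copy()  # g[j]: minimum of p from the start of j's block of length k up to j
    h = p.copy()  # h[j]: minimum of p from j up to the end of j's block
    for j in range(1, m):
        if j % k:
            g[j] = min(g[j], g[j - 1])
    for j in range(m - 2, -1, -1):
        if (j + 1) % k:
            h[j] = min(h[j], h[j + 1])
    i = np.arange(n)
    # The window p[i : i + k] spans at most two blocks: combine the two partial minima.
    return np.minimum(h[i], g[i + k - 1])


def erode_rect(image, height, width):
    """Erosion by a centered height x width rectangle: a row pass, then a column pass."""
    img = np.asarray(image, dtype=float)
    rows = np.array([erode_1d(row, width) for row in img])
    return np.array([erode_1d(col, height) for col in rows.T]).T


eroded = erode_rect(np.arange(20.0).reshape(4, 5), 3, 3)
```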

CINEMA and AURALIAS: recognition of a user's gestures, which gives the user real-time control over auralization and audio spatialization processes.

Innovations:

* gesture-based control of a sound environment

* user-specific auralization (including user localization and audio generation)

Publication: [34]

References

[1] Real-Time Semantic Background Subtraction. IEEE International Conference on Image Processing (ICIP):3214-3218, 2020.

[2] An Effective Hit-or-Miss Layer Favoring Feature Interpretation as Learned Prototypes Deformations. AAAI Conference on Artificial Intelligence, Workshop on Network Interpretability for Deep Learning:1-8, 2019.

[3] HitNet: a neural network with capsules embedded in a Hit-or-Miss layer, extended with hybrid data augmentation and ghost capsules. CoRR, abs/1806.06519, 2018. URL https://arxiv.org/abs/1806.06519.

[4] Evaluation of pairwise calibration techniques for range cameras and their ability to detect a misalignment. International Conference on 3D Imaging (IC3D):1-6, 2014. URL https://doi.org/10.1109/IC3D.2014.7032596.

[5] Adaptive bilateral filtering for range images. URSI Benelux Forum, 2012.

[6] A new jump edge detection method for 3D cameras. IEEE International Conference on 3D Imaging (IC3D):1-7, 2011. URL https://doi.org/10.1109/IC3D.2011.6584393.

[7] Simple median-based method for stationary background generation using background subtraction algorithms. International Conference on Image Analysis and Processing (ICIAP), Workshop on Scene Background Modeling and Initialization (SBMI), 9281:477-484, 2015. URL https://doi.org/10.1007/978-3-319-23222-5_58.

[8] LaBGen-P-Semantic: A First Step for Leveraging Semantic Segmentation in Background Generation. Journal of Imaging, 4(7):86, 2018. URL https://doi.org/10.3390/jimaging4070086.

[9] LaBGen-P: A Pixel-Level Stationary Background Generation Method Based on LaBGen. IEEE International Conference on Pattern Recognition (ICPR), IEEE Scene Background Modeling Contest (SBMC):107-113, 2016. URL http://orbi.ulg.ac.be/handle/2268/201146.

[10] Supervised detection of exoplanets in high-contrast imaging sequences. Astronomy & Astrophysics, 613:1-13, 2018. URL http://hdl.handle.net/2268/217926.

[11] Low-rank plus sparse decomposition for exoplanet detection in direct imaging ADI sequences: The LLSG algorithm. Astronomy & Astrophysics, 589(A54):1-9, 2016. URL http://hdl.handle.net/2268/196090.

[12] VIP: Vortex Image Processing package for high-contrast direct imaging. The Astronomical Journal, 154(1):7:1-7:12, 2017. URL https://doi.org/10.3847/1538-3881/aa73d7.

[13] ARTHuS: Adaptive Real-Time Human Segmentation in Sports Through Online Distillation. IEEE International Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), CVsports:2505-2514, 2019. URL https://doi.org/10.1109/CVPRW.2019.00306.

[14] A Context-Aware Loss Function for Action Spotting in Soccer Videos. IEEE International Conference on Computer Vision and Pattern Recognition (CVPR):13123-13133, 2020. URL https://doi.org/10.1109/CVPR42600.2020.01314.

[15] Multimodal and multiview distillation for real-time player detection on a football field. IEEE International Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), CVsports:3846-3855, 2020. URL https://doi.org/10.1109/CVPRW50498.2020.00448.

[16] Mid-Air: A Multi-Modal Dataset for Extremely Low Altitude Drone Flights. IEEE International Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), UAVision:553-562, 2019. URL https://doi.org/10.1109/CVPRW.2019.00081.

[17] Survey and synthesis of state of the art in driver monitoring. Sensors, 21(16):1-49, 2021. URL https://doi.org/10.3390/s21165558.

[18] Deep Background Subtraction with Scene-Specific Convolutional Neural Networks. IEEE International Conference on Systems, Signals and Image Processing (IWSSIP):1-4, 2016. URL https://doi.org/10.1109/IWSSIP.2016.7502717.

[19] Semantic Background Subtraction. IEEE International Conference on Image Processing (ICIP):4552-4556, 2017. URL https://doi.org/10.1109/ICIP.2017.8297144.

[20] Image classification using neural networks. 2018. URL https://orbi.uliege.be/handle/2268/226179.

[21] Foreground and background detection method. 2020.

[22] Foreground and background detection method. 2019.

[23] Foreground and background detection method. 2019.

[24] ViBe: A Disruptive Method for Background Subtraction. In Background Modeling and Foreground Detection for Video Surveillance. Chapman and Hall/CRC, Jul 2014. URL https://doi.org/10.1201/b17223.

[25] Visual Background Extractor. 2011.

[26] Visual background extractor. 2009. URL https://patentscope.wipo.int/search/en/detail.jsf?docId=WO2009007198.

[27] Visual background extractor. 2011.

[28] Visual background extractor. 2010.

[29] Background Subtraction: Experiments and Improvements for ViBe. Change Detection Workshop (CDW), in conjunction with CVPR:32-37, 2012. URL https://doi.org/10.1109/CVPRW.2012.6238924.

[30] Techniques for a selective encryption of uncompressed and compressed images. Advanced Concepts for Intelligent Vision Systems (ACIVS):90-97, 2002. URL http://www.ulg.ac.be/telecom/publi/publications/mvd/acivs2002mvd/index.html.

[31] The VORTEX project: first results and perspectives. Adaptive Optics Systems IV, 9148, 2014. URL https://doi.org/10.1117/12.2055702.

[32] ViBe: A universal background subtraction algorithm for video sequences. IEEE Transactions on Image Processing, 20(6):1709-1724, 2011. URL https://doi.org/10.1109/TIP.2010.2101613.

[33] Multi-Timescale Drowsiness Characterization Based on a Video of a Driver's Face. Sensors, 18(9):1-17, 2018. URL https://doi.org/10.3390/s18092801.

[34] A video-based human-computer interaction system for audio-visual immersion. Proceedings of SPS-DARTS 2006:23-26, 2006.

[35] Defining a score based on gait analysis for the longitudinal follow-up of MS patients. Multiple Sclerosis Journal, 23(S11):408-409, 2015. URL http://orbi.ulg.ac.be//handle/2268/184249. Proceedings of ECTRIMS 2015 (Barcelona, Spain), P817.

[36] Understanding how people with MS get tired while walking. Multiple Sclerosis Journal, 23(S11):406, 2015. URL http://orbi.ulg.ac.be/handle/2268/184207. Proceedings of ECTRIMS 2015 (Barcelona, Spain).

[37] Design of a reliable processing pipeline for the non-intrusive measurement of feet trajectories with lasers. IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP):4399-4403, 2014. URL https://doi.org/10.1109/ICASSP.2014.6854433.

[38] GAIMS: A Reliable Non-Intrusive Gait Measuring System. ERCIM News, 95:26-27, 2013. URL http://hdl.handle.net/2268/157553.

[39] A New Three Object Triangulation Algorithm for Mobile Robot Positioning. IEEE Transactions on Robotics, 30(3):566-577, 2014. URL https://doi.org/10.1109/TRO.2013.2294061.

[40] BeAMS: a Beacon based Angle Measurement Sensor for mobile robot positioning. IEEE Transactions on Robotics, 30(3):533-549, 2014. URL https://doi.org/10.1109/TRO.2013.2293834.

[41] Morphological erosions and openings: fast algorithms based on anchors. Journal of Mathematical Imaging and Vision, Special Issue on Mathematical Morphology after 40 Years, 22(2-3):121-142, 2005. URL https://doi.org/10.1007/s10851-005-4886-2.

[42] Fast computation of morphological operations with arbitrary structuring elements. Pattern Recognition Letters, 17(14):1451-1460, 1996. URL https://doi.org/10.1016/S0167-8655(96)00113-4.

[43] Partial encryption of images for real-time applications. IEEE Signal Processing Symposium:11-15, 2004. URL http://www.ulg.ac.be/telecom/publi/publications/mvd/sps-2004/index.html. Invited presentation.