Summarization: towards a robust technique for aggregating the results of multiple experiments in classification

Often, in classification, we have to aggregate the experimental results of different experiments. While the summarization by averaging performance indicators is a valuable effort to standardize the evaluation procedure, it has no theoretical justification and it breaks the intrinsic relationships between summarized indicators. This leads to interpretation inconsistencies. In this paper, we present a theoretical approach to summarize the performances for multiple experiments that preserves the relationships between performance indicators. In addition, we give formulas and an algorithm to calculate summarized performances. Our first showcase was that of CDNET (A video database for testing change detection algorithms) (see¬†3‚Üď hereafter).

Theoretical idea

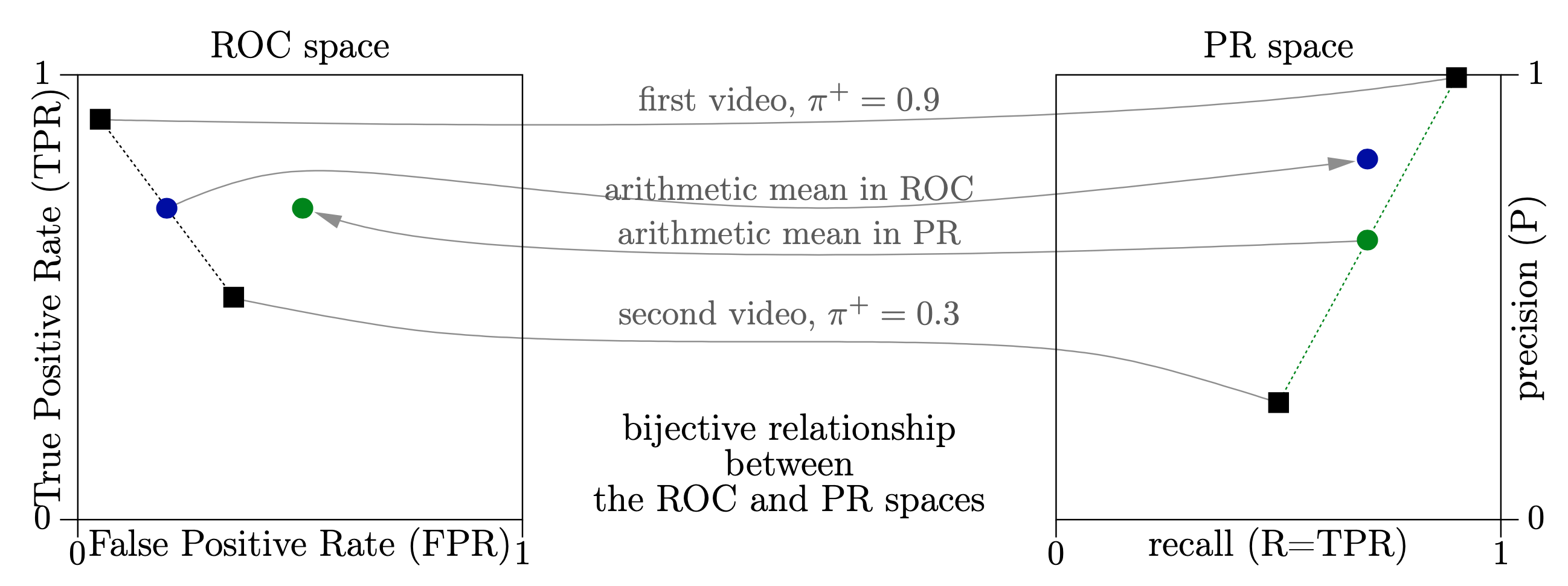

Figure 1 How can we summarize the performances of a background subtraction algorithm, or any other type of binary classifier, measured on several videos? This figure shows that arithmetically averaging the performances breaks the bijective relationship that exists between the ROC and Precision-Recall (PR) spaces [1].

This is the reason why we have introduced the notion of summarization. The principle is described in the paper Summarizing the performances of a background subtraction algorithm measured on several videos (follow this link for more details).

Technical material

- Original paper: Summarizing the performances of a background subtraction algorithm measured on several videos [1]

- PDF presentation at the conference

- Video presentation

Showcase

First showcase: we have applied summarization to the CDNET (A video database for testing change detection algorithms) database. All our results are available hereafter. You can also get the spreadsheet version of it.

References

[1] . Summarizing the performances of a background subtraction algorithm measured on several videos. IEEE International Conference on Image Processing (ICIP), 2020. URL http://hdl.handle.net/2268/247821.